UNSUPERVISED LEARNING

What is Unsupervised Learning in Machine Learning?

Unsupervised learning is a type of machine learning that draws conclusions from datasets consisting of input data without labelled responses. In contrast to supervised learning, which trains a model on labelled data, unsupervised learning works with data that has no pre-existing labels. Its main objective is to find hidden patterns, groupings, or features in the data.

EXAMPLE: Suppose an unsupervised learning algorithm is given an input dataset of pictures of various breeds of dogs and cats. The algorithm is never told which picture shows which animal, so it has no prior knowledge of the categories in the dataset. Its job is to discover structure from the image features themselves, which it does by organising the image collection into groups based on the similarities between images.

Reasons for Using Unsupervised Learning:

- Unsupervised learning helps in identifying hidden patterns and structures in data without relying on labelled examples. This is crucial for understanding the inherent distribution and relationships within the data.

- It helps in identifying the most important features, thus reducing the dimensionality of the data and potentially improving the performance of supervised learning models.

- Unsupervised learning can identify user preferences and behaviour patterns to provide personalized recommendations without needing explicit feedback.

- Identifying unusual patterns or outliers in transaction data can help detect fraudulent activities.
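The last point, spotting outliers in transaction data, can be illustrated with a very small sketch. It uses a plain z-score rule, which is only one simple way to flag unusual values and is not a method named in this document. The function name `flag_outliers`, the `threshold` parameter, and the sample amounts are all hypothetical, chosen only for illustration.

```python
from statistics import mean, pstdev

def flag_outliers(values, threshold=3.0):
    """Return the values whose z-score (distance from the mean in
    standard deviations) exceeds the threshold. No labels are used."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return []  # all values identical, so nothing stands out
    return [v for v in values if abs(v - mu) / sigma > threshold]

# Hypothetical transaction amounts; one is far larger than the rest.
amounts = [20.0, 22.5, 19.0, 21.0, 23.0, 20.5, 18.5, 22.0, 950.0]
outliers = flag_outliers(amounts, threshold=2.0)
print(outliers)
```

With so few values, a single extreme amount inflates the standard deviation, which is why the example uses a threshold of 2.0 rather than the default.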

Working:

Here the input data is unlabelled: it is not categorised, and no corresponding outputs are provided. This unlabelled data is fed to the machine learning model for training. The model analyses the raw data to identify hidden patterns, using a suitable algorithm such as k-means clustering or hierarchical clustering.

The chosen algorithm then groups the data objects according to the similarities and differences among them.

Unsupervised working

Main Unsupervised Learning Algorithms:

- Clustering: Clustering is a fundamental technique in unsupervised machine learning that involves partitioning a dataset into distinct groups, or clusters, such that items in the same cluster are more similar to each other than to those in other clusters. Clustering helps in discovering inherent structures in the data, making it useful for a variety of applications, from market segmentation to anomaly detection.

- Sub-Types:

K-Means Clustering: Divides the data into K clusters by minimizing the variance within each cluster. It starts with K initial centroids and iteratively refines their positions based on the mean of the points in each cluster.

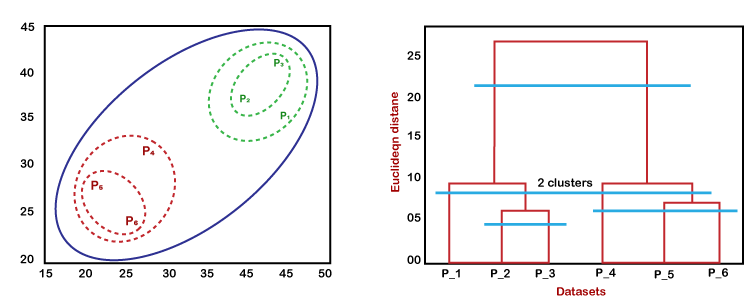
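The K-Means procedure described above can be sketched in plain Python. This is a minimal illustration, not a production implementation: the function names, the choice to pass the initial centroids in explicitly (so the run is reproducible), and the sample points are all assumptions made for this example.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def mean_point(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))

def k_means(points, initial_centroids, max_iters=100):
    """Alternate between assigning points to the nearest centroid and
    moving each centroid to the mean of its assigned points."""
    centroids = list(initial_centroids)
    k = len(centroids)
    assignments = None
    for _ in range(max_iters):
        # Assignment step: index of the nearest centroid for each point.
        new_assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_assignments == assignments:
            break  # no point changed cluster, so the result is stable
        assignments = new_assignments
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # leave a centroid in place if its cluster is empty
                centroids[c] = mean_point(members)
    return centroids, assignments

# Hypothetical 2-D data with two visually separate groups.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]
centroids, labels = k_means(points, initial_centroids=[points[0], points[3]])
print(labels)
print(centroids)
```

In practice the initial centroids are usually chosen at random or with a seeding heuristic, and different starting points can lead to different final clusters.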

Hierarchical Clustering: In its common agglomerative (bottom-up) form, each data point starts in its own cluster. The two most similar clusters are then merged repeatedly until a stopping condition is met (e.g., the desired number of clusters is reached).
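The bottom-up merging described above can be sketched as follows. The sketch assumes single linkage (the distance between two clusters is the distance between their closest pair of points) as the similarity criterion. That is one common choice, not something this document specifies, and the function names and sample points are hypothetical.

```python
def euclidean(a, b):
    """Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

def single_linkage(cluster_a, cluster_b):
    """Distance between the closest pair of points across two clusters."""
    return min(euclidean(p, q) for p in cluster_a for q in cluster_b)

def agglomerative(points, n_clusters):
    """Start with one cluster per point and merge the two closest
    clusters until only n_clusters remain (the stopping condition)."""
    clusters = [[p] for p in points]
    while len(clusters) > n_clusters:
        best = None  # (distance, i, j) of the closest pair found so far
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = single_linkage(clusters[i], clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        clusters[i].extend(clusters[j])  # merge cluster j into cluster i
        del clusters[j]
    return clusters

# Hypothetical 2-D data: a tight trio, a close pair, and a lone point.
points = [(0.0, 0.0), (0.5, 0.0), (0.0, 0.4), (5.0, 5.0), (5.5, 5.0), (9.0, 0.0)]
clusters = agglomerative(points, n_clusters=3)
for cluster in clusters:
    print(cluster)
```

This brute-force version recomputes every pairwise cluster distance on each merge, so it is only suitable for small datasets.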

Applications:

- Anomaly detection: Unsupervised learning can identify unusual patterns or deviations from normal behaviour in data, enabling the detection of fraud, intrusion, or system failures.

- Scientific discovery: Unsupervised learning can uncover hidden relationships and patterns in scientific data, leading to new hypotheses and insights in various scientific fields.

- Recommendation systems: Unsupervised learning can identify patterns and similarities in user behaviour and preferences to recommend products, movies, or music that align with their interests.

- Image analysis: Unsupervised learning can group images based on their content, facilitating tasks such as image classification, object detection, and image retrieval.

Advantages:

- It does not require training data to be labelled.

- Capable of finding previously unknown patterns in data.

- Unsupervised learning can help you gain insights from unlabelled data that you might not have been able to get otherwise.

- Unsupervised learning is good at finding patterns and relationships in data without being told what to look for. This can help you learn new things about your data.

Disadvantages:

- It is difficult to measure accuracy or effectiveness because there are no predefined answers to compare against during training.

- Results are often less accurate than those of supervised methods, since no labels guide the training.

- The user needs to spend time interpreting the resulting groups and labelling the classes they represent.

- Unsupervised learning can be sensitive to data quality, including missing values, outliers, and noisy data.

Difference between Supervised and Unsupervised Machine Learning:

| Parameters | Supervised machine learning | Unsupervised machine learning |
| --- | --- | --- |
| Input Data | Algorithms are trained using labelled data. | Algorithms are used against data that is not labelled. |
| Computational Complexity | Simpler method | Computationally complex |
| Accuracy | Generally more accurate | Generally less accurate |
| No. of classes | No. of classes is known | No. of classes is not known |
| Data Analysis | Uses offline analysis | Uses real-time analysis of data |
| Algorithms used | Linear and logistic regression, random forest, multi-class classification, decision tree, Support Vector Machine, neural network, etc. | K-Means clustering, hierarchical clustering, etc. |
| Output | Desired output is given. | Desired output is not given. |
| Training data | Uses labelled training data to infer a model. | No labelled training data is used. |
| Example | Example: Optical character recognition. | Example: Find a face in an image. |

[1] https://www.javatpoint.com/unsupervised-machine-learning
